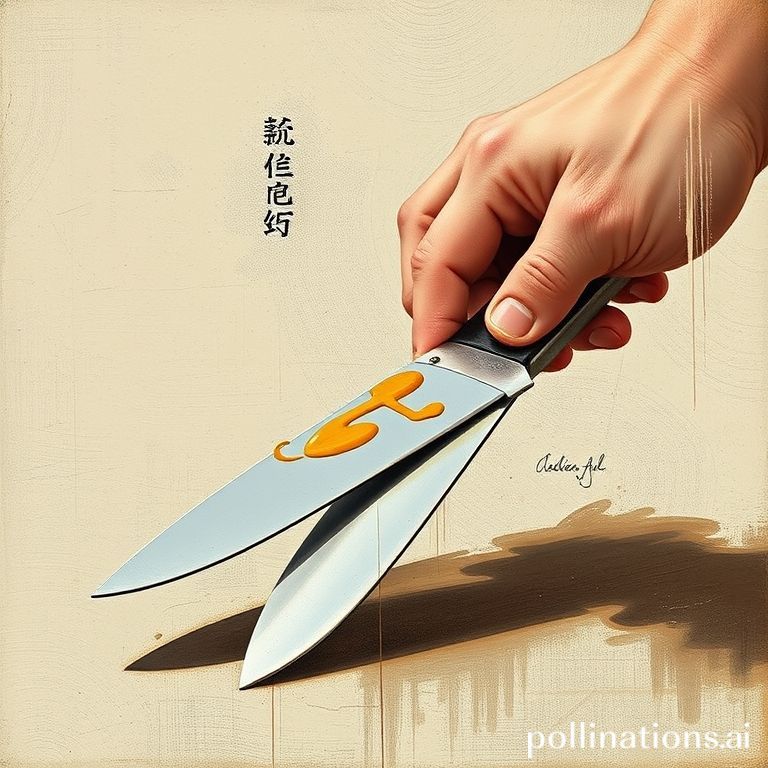

In the world of craftsmanship, a truly sharp knife isn’t just born that way; it’s maintained. It’s honed, stropped, and sharpened against another surface, often a whetstone, or even another blade, until its edge is impossibly keen. What if we told you that the most advanced artificial intelligence models are evolving in much the same way – not in isolation, but by ‘sharpening’ each other?

The traditional view of AI development often focuses on a single model, trained on a massive dataset, striving for peak performance. While this approach has yielded incredible results, the next frontier in AI isn’t about isolated brilliance, but about interconnected systems that push each other to new heights, much like an endless evolutionary arms race.

Consider Generative Adversarial Networks (GANs). Here, two neural networks are pitted against each other: a ‘Generator’ that creates synthetic data (like fake images) and a ‘Discriminator’ that tries to distinguish real data from the Generator’s fakes. The Generator’s goal is to create fakes so convincing that the Discriminator can’t tell the difference, while the Discriminator’s goal is to catch every fake. This adversarial dance is a clear example of AI models sharpening each other. As the Generator gets better at faking, the Discriminator is forced to become more discerning, and vice versa. Each model’s mistakes become the other’s training signal: every fake that gets caught tells the Generator what to fix, and every fake that slips through tells the Discriminator what it missed. Over many rounds, this can drive a striking improvement in the quality of generated content.
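
To make the alternating updates concrete, here is a minimal sketch of that loop on a toy one-dimensional problem. Everything in it is an assumption chosen for illustration: the ‘real’ data is drawn from a Gaussian centred at 4, the Generator is a single linear map, the Discriminator is a single logistic unit, and the Generator uses the common non-saturating objective. Real GANs use deep networks and an autodiff library; the gradients here are written out by hand.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.05, seed=0):
    """Alternate Discriminator and Generator updates on 1-D toy data.

    Generator:      G(z) = a * z + b         (hypothetical toy model)
    Discriminator:  D(x) = sigmoid(w * x + c)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # Generator parameters
    w, c = 0.0, 0.0  # Discriminator parameters
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # one sample of "real" data (assumed)
        z = rng.gauss(0.0, 1.0)     # noise fed to the Generator
        fake = a * z + b

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(fake), using the
        # Discriminator that was just updated.
        d_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - d_fake) * w  # d log D(fake) / d fake
        a += lr * grad_fake * z
        b += lr * grad_fake
    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"Generator offset b = {b:.2f} (real data is centred at 4.0)")
```

Notice that neither update makes sense on its own: the Generator’s gradient flows through the Discriminator’s current weights, and the Discriminator’s gradient depends on the Generator’s current fakes. Toy GANs like this one can oscillate rather than settle, so treat the printed value as something to observe, not a guaranteed result.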

This isn’t just limited to creating realistic images. We see similar dynamics in reinforcement learning. Think of AlphaGo, Google DeepMind’s AI that mastered the game of Go. The original AlphaGo first learned from human expert games and then improved through self-play. Its successor, AlphaGo Zero, went further: it used no human game data at all and learned entirely by playing against *itself*. By continuously sparring with improved versions of itself, it found strategies that departed from established human play and reached superhuman performance. Each iteration was a ‘knife’ sharpening a newer, better version of the same knife.
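
The self-play idea can be sketched on a far smaller game. The example below is not AlphaGo Zero’s method (which combines deep networks with Monte Carlo tree search); it is a tabular toy, assuming a simple Nim variant in which players alternately take 1 to 3 stones and whoever takes the last stone wins. Both ‘players’ share one value table, so every game the agent plays against itself updates the opponent it will face next. The function names and hyperparameters are hypothetical.

```python
import random


def legal_moves(pile):
    """Stones a player may take: 1 to 3, never more than remain."""
    return [k for k in (1, 2, 3) if k <= pile]


def best_move(q, pile):
    """Greedy move under the current value table."""
    return max(legal_moves(pile), key=lambda k: q.get((pile, k), 0.0))


def train_self_play(episodes=20000, max_pile=10, epsilon=0.2, lr=0.05, seed=0):
    """Learn Nim by playing both sides with one shared value table."""
    rng = random.Random(seed)
    q = {}  # (pile, move) -> estimated outcome for the player who moves
    for _ in range(episodes):
        pile = rng.randint(1, max_pile)
        history = []  # moves in order; the two players alternate
        while pile > 0:
            if rng.random() < epsilon:
                move = rng.choice(legal_moves(pile))  # explore
            else:
                move = best_move(q, pile)             # exploit
            history.append((pile, move))
            pile -= move
        # The last mover took the final stone and wins (+1); walking
        # backwards, the sign flips because the players alternate.
        reward = 1.0
        for state_action in reversed(history):
            old = q.get(state_action, 0.0)
            q[state_action] = old + lr * (reward - old)
            reward = -reward
    return q


if __name__ == "__main__":
    q = train_self_play()
    for pile in (5, 6, 7):
        print(f"pile={pile}: take {best_move(q, pile)}")
```

In this game the winning strategy is to leave the opponent a multiple of four stones, and nothing in the code states that rule. It emerges only because each version of the agent is punished by the version sitting across the table.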

Beyond direct competition, this ‘mutual sharpening’ also occurs through more collaborative means. Transfer learning, where a model pre-trained on a vast general dataset provides a foundational ‘edge’ for another model to specialize in a niche task, is a form of this. The specialized model then refines this inherited knowledge, sometimes even discovering insights that could, in principle, feed back into and improve the general model. We also see this in the development of robust AI. Researchers design ‘adversarial examples’: inputs with subtle perturbations crafted to trick a model. When these examples are fed back into training (a technique known as adversarial training), the model learns to be more resilient and less susceptible to manipulation, essentially ‘sharpening its defenses’ against cunning attacks.
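
To show what a ‘subtle perturbation’ looks like in practice, here is a small sketch of one well-known recipe, the fast gradient sign method (FGSM), applied to a hand-written logistic regression classifier. The weights, the input, and the step size `eps` are made-up values for illustration; the same idea is normally applied to deep networks through an autodiff library.

```python
import math


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-logit))


def fgsm(w, b, x, y, eps):
    """Nudge each feature by eps in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


if __name__ == "__main__":
    w, b = [2.0, -1.0], 0.0   # hypothetical trained weights
    x, y = [1.0, 1.0], 1      # an input whose true label is class 1
    x_adv = fgsm(w, b, x, y, eps=0.5)
    print(round(predict_proba(w, b, x), 2))      # 0.73: correctly class 1
    print(round(predict_proba(w, b, x_adv), 2))  # 0.38: flipped to class 0
```

No feature moves by more than `eps`, yet the prediction flips. Adversarial training closes the loop by adding inputs like `x_adv`, paired with their correct labels, back into the training set.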

The implications of this self-improving, mutually refining paradigm are profound. It can accelerate progress by giving each model a training partner that gets harder as the model gets better, which tends to produce systems that are more robust and better at handling complex, real-world variability. It fosters a continuous learning environment where intelligence isn’t static but fluid and ever-evolving. Just as a blacksmith repeatedly folds and hammers steel to remove impurities and create a blade of unparalleled strength and sharpness, AI models are now forging their own intelligence through iterative, interactive refinement.

The future of AI isn’t just about building bigger models; it’s about building smarter, interconnected ecosystems where every component contributes to the collective intelligence, constantly pushing the boundaries of what’s possible. The knives are indeed sharpening, and the future of AI looks incredibly keen.